Since the release of GPT-3.5, GenAI has captured global attention and sparked excitement about its applications across many domains and the efficiency gains they promise. The corporate world was no different. From chatbots drafting emails to agents browsing Excel sheets to find specific data points, the narrative in 2024–2025 was that large language models would transform business.

However, as we approach the end of Q3 2025, the enterprise landscape is defined by a stark and costly paradox: the GenAI Divide. Despite an estimated $30–40 billion in corporate spending on generative AI, a landmark 2025 report from MIT's NANDA initiative, State of AI in Business 2025, finds that 95% of these investments have yielded zero measurable business returns.

The report draws on a systematic review of more than 300 publicly disclosed AI initiatives, structured interviews with representatives from 52 organizations, and survey responses from 153 senior leaders collected across four major industry conferences. It found that about 95% of enterprise AI pilots fail to reach production or deliver a return (the market saw a sizeable hiccup when the report was released). This gap between widespread adoption and minimal transformation has become known as the GenAI divide.

High adoption, low transformation

The study paints a picture of eager experimentation paired with disappointing outcomes. More than 80% of organizations have explored or piloted general-purpose tools like ChatGPT and Microsoft Copilot, and about half of those have successfully deployed them. These tools, however, mainly enhance personal productivity.

When it came to enterprise-grade systems, meaning custom solutions or vendor-sold software designed to embed AI into workflows, only about 20% of organizations progressed to the pilot stage, and just 5% of integrated AI pilots are extracting millions in value. The large companies and younger startups that do excel with AI tend to pick one pain point, execute well, and partner smartly with the companies that use their tools.

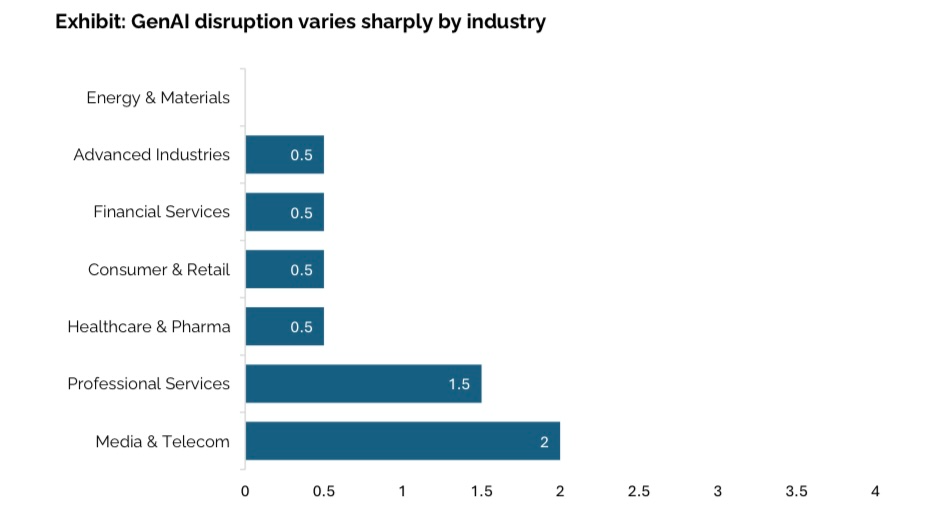

The study’s composite AI disruption index, where each industry was scored from 0 to 5 based on observable indicators like market share volatility, revenue growth of AI‑native firms founded after 2020 and the emergence of new AI-driven business models, shows that only two industries (technology and media) exhibit meaningful structural disruption. Seven of nine sectors, including healthcare, energy and advanced industries, show little to no change.

This disconnect is not due to a lack of enthusiasm. Large enterprises actually lead in pilot count, while mid-market organizations tend to be more decisive, moving from pilot to full deployment in about 90 days compared with nine months or more for big firms. Yet the intensity of enterprise interest has not translated into success. Generic consumer tools are widely adopted because they are easy to try and flexible, but when organizations invest millions in specialised systems, those solutions often prove brittle, ill-suited to real workflows and unable to learn or adapt. This is the essence of the GenAI divide: high adoption but low transformation.

Patterns behind the divide

To understand why so few GenAI pilots progress beyond the experimental phase, the study asked both executive sponsors and frontline users across 52 organizations to rate common barriers to scale. The results revealed a predictable leader: resistance to adopting new tools. The interviews also surfaced four recurring patterns that explain why most organizations remain on the wrong side of the divide:

- Model output quality concerns: users readily rely on LLMs for simple tasks, but in enterprise settings they report inconsistent or brittle outputs when the model lacks domain context or memory of prior corrections.

- Poor user experience: tools that require re-entering extensive context, break on edge cases, or fail to integrate with the systems people already use quickly get abandoned.

- Investment bias: budgets are skewed toward highly visible front‑office functions such as sales and marketing, while back‑office automation often yields better return on investment.

- Implementation advantage: external partnerships see twice the success rate of internal builds, a point echoed by other analysts such as the Real Story Group, which notes that strategic partnerships achieve a 66 percent deployment rate compared with 33 percent for in‑house efforts.

All of this suggests that the main obstacle is not model horsepower, regulation, infrastructure, or raw talent; it’s that most enterprise tools lack memory, contextual adaptation, and workflow fit. In short, they don’t learn. When systems fail to retain feedback and improve over time, users disengage, pilots stall, and production never arrives. The result is visible activity without structural change.

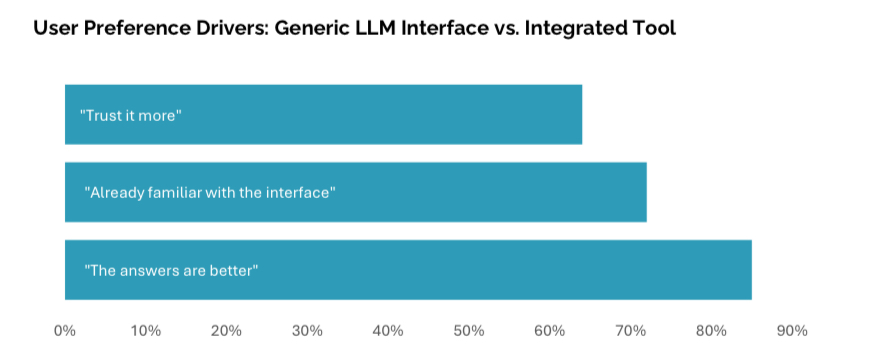

The divide also shows up in user preferences: users favour ChatGPT over enterprise tools because they find it better, faster, and more familiar, even when both use similar models. This same preference exposes why organizations remain stuck on the wrong side of the divide.

The professionals interviewed also expressed a striking contradiction: while skeptical about enterprise AI tools, they were often heavy users of consumer LLM interfaces. Their $20-per-month general-purpose tool often outperforms bespoke enterprise systems costing orders of magnitude more, at least in terms of immediate usability and user satisfaction. This paradox exemplifies why most organizations remain on the wrong side of the GenAI Divide.

Misaligned investment priorities

When asked to allocate a hypothetical budget for AI applications across different functions, companies directed around 70% of it to sales and marketing. Marketing teams invested in AI-generated emails, smart lead scoring and content personalization. Operations and finance functions received far less funding despite offering clearer and faster returns. Case studies in the report show that back-office automation can eliminate $2–10 million in business process outsourcing costs and reduce external agency spend by 30%. These savings often occur without cutting internal staff; instead, companies save by insourcing work they previously outsourced.

But why does this misallocation persist? Sales and marketing outcomes (e.g., demo volume or email response rates) are visible and tie directly to board-level KPIs, whereas legal, procurement and finance improvements are harder to quantify. Trust and social proof also shape purchase decisions: procurement leaders rely heavily on referrals from peers and existing vendors rather than evaluating functions or features. The result is a cycle in which resources flow to visible but less transformative use cases, which in turn reinforces the GenAI divide.

What enterprises actually want

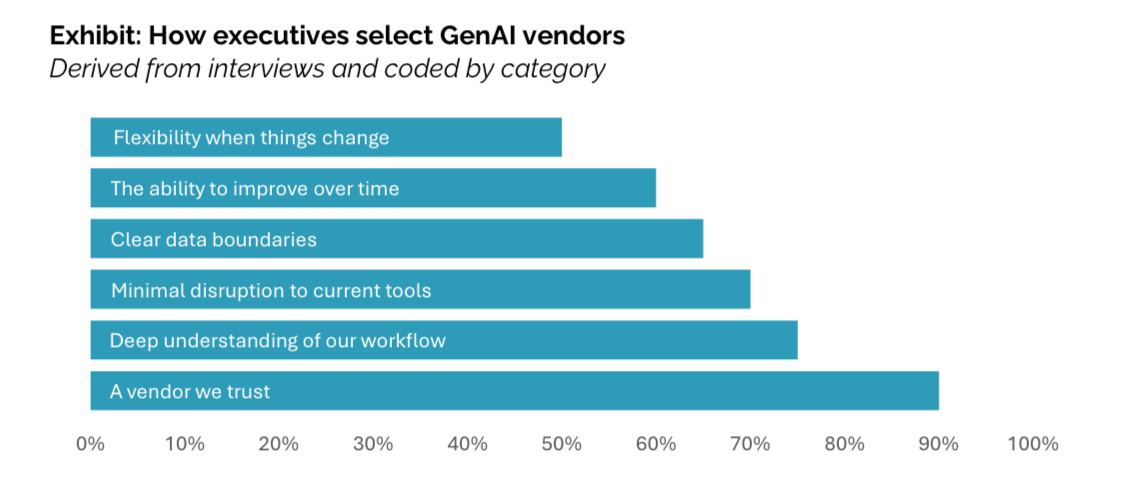

The most successful vendors understand that crossing the GenAI Divide requires building what executives repeatedly asked for: AI systems that do not just generate content, but learn and improve within their environment.

When evaluating AI tools across those same interviews, buyers consistently emphasized a specific set of priorities:

- Deep workflow integration: the tools must plug into the systems people already live in (CRM/ERP/IDP, ticketing, knowledge bases) and minimise disruption to current processes. Integration ease is a gating factor, not a “nice to have.”

- Clear data boundaries: leaders want to know what goes where: data residency, isolation, retention, fine-grained permissions, auditability, and a kill switch. Ambiguity equals delay.

- Systems that can learn in production: systems should retain context, incorporate feedback, and improve through use, closing the loop so that quality compounds rather than resets every session.

- Flexibility as processes evolve: business logic, forms, approval chains, and metrics shift quarterly. Tools that require re-implementation to keep up will be abandoned.

- A vendor they could trust: existing partners and credible referrals matter. When stakes are high, buyers favour vendors who know their domain and can prove durable support.

Surprisingly, concerns about workforce impact were far less common in the study than anticipated. Most users welcomed automation, especially for tedious, manual tasks, as long as data remained secure and outcomes were measurable. Despite this interest in AI, there is notable skepticism toward emerging vendors, especially in high-trust or regulated workflows. Many procurement leaders told the researchers that they ignore most startup pitches, regardless of innovation.

"We receive dozens of pitches daily about AI-powered procurement tools. However, our established BPO partner already understands our policies and processes. We're more likely to wait for their AI-enhanced version than switch to an unknown vendor," said a head of procurement at a global CPG company.

How to cross the divide

Startups that successfully cross the GenAI Divide land small, visible wins in narrow workflows, then expand. Tools with low setup burden and fast time-to-value consistently outperform heavy enterprise builds. Because enterprise buyers rely on trust signals, channel referrals and peer proof are powerful growth levers.

Two execution strategies that work:

- Start narrow, embed in adjacent, low-risk processes, then scale. Focus on non-critical or adjacent processes where you can apply significant customization without risking core operations. Prove value quickly and scale into core workflows only after the wins are undeniable. Tools that succeed here share two traits: low configuration burden and immediate, visible value. In contrast, tools that demand extensive enterprise customization up front tend to stall at pilot.

- Make learning-in-production a product feature. Persist case memory, capture human edits as feedback, and close the loop with outcome signals (accepted drafts, escalations, SLA hits). This is how quality compounds and trust grows.
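The learning loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not something taken from the report: the `CaseMemory` class, its table schema, the method names and the `edit_ratio` helper are all hypothetical, and SQLite stands in for whatever store a real product would use. It shows the three pieces named in the text: persisted case records, human edits captured as feedback, and outcome signals that close the loop.

```python
import sqlite3
from difflib import SequenceMatcher


class CaseMemory:
    """Hypothetical store for drafts, human edits, and outcome signals."""

    def __init__(self, path: str = ":memory:") -> None:
        # Pass a file path instead of ":memory:" to keep records across restarts.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS cases ("
            "case_id TEXT PRIMARY KEY, draft TEXT NOT NULL, "
            "final TEXT, outcome TEXT)"
        )

    def log_draft(self, case_id: str, draft: str) -> None:
        """Record what the system produced for a case."""
        self.db.execute(
            "INSERT OR REPLACE INTO cases (case_id, draft) VALUES (?, ?)",
            (case_id, draft),
        )
        self.db.commit()

    def log_human_edit(self, case_id: str, final: str) -> None:
        """Record the text a human actually sent (the feedback signal)."""
        self.db.execute(
            "UPDATE cases SET final = ? WHERE case_id = ?", (final, case_id)
        )
        self.db.commit()

    def log_outcome(self, case_id: str, outcome: str) -> None:
        """Record an outcome signal, e.g. 'accepted', 'escalated', 'sla_hit'."""
        self.db.execute(
            "UPDATE cases SET outcome = ? WHERE case_id = ?", (outcome, case_id)
        )
        self.db.commit()

    def corrections(self) -> list[tuple[str, str]]:
        """Return (draft, final) pairs where a human changed the draft."""
        rows = self.db.execute(
            "SELECT draft, final FROM cases "
            "WHERE final IS NOT NULL AND final <> draft ORDER BY case_id"
        )
        return [(draft, final) for draft, final in rows]

    def outcome_rate(self, outcome: str) -> float:
        """Fraction of cases with a recorded outcome that match `outcome`."""
        total = self.db.execute(
            "SELECT COUNT(*) FROM cases WHERE outcome IS NOT NULL"
        ).fetchone()[0]
        if total == 0:
            return 0.0
        hits = self.db.execute(
            "SELECT COUNT(*) FROM cases WHERE outcome = ?", (outcome,)
        ).fetchone()[0]
        return hits / total


def edit_ratio(draft: str, final: str) -> float:
    """0.0 means the draft was kept verbatim; higher means more rewriting."""
    return 1.0 - SequenceMatcher(None, draft, final).ratio()
```

In a sketch like this, the pairs returned by `corrections()` could be fed back to the model as examples on similar future cases, while `outcome_rate("accepted")` and the average `edit_ratio` give an operational measure of whether quality is actually improving over time.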

Where startups are winning now

- Voice AI for call summarization and routing

- Document automation for contracts and forms

- Code generation for repetitive engineering tasks

But they still struggle elsewhere: use cases that involve complex internal logic, opaque decision support, or proprietary heuristics often hit adoption friction because they are so deeply enterprise-specific.

From pilots to memory: choosing the winning stack

Agentic AI, together with protocols and frameworks such as MCP and LangChain, will decide which vendors help enterprises cross the GenAI divide and which ones remain stuck. Buyers are now demanding systems that adapt over time: Copilot and Dynamics 365 are adding persistent memory and feedback loops, and ChatGPT’s memory signals the same expectation for general-purpose tools. The window is narrow: in many verticals, pilots are already underway, and over the next 18 months enterprises will lock in learning-capable stacks that create compounding switching costs.

Startups that move fastest, shipping adaptive agents that learn from feedback, usage, and outcomes and embedding them deeply in workflows, can build durable moats through data and integration depth.

For buyers, the pattern is clear: act like BPO clients, not SaaS tourists. Prioritise strategic partnerships, frontline adoption, and operational KPIs; treat deployment as co-evolution with vendors. Under the hood, emerging protocols (MCP, A2A, NANDA) are enabling an Agentic Web where specialised agents interoperate, replacing monolithic apps with dynamic coordination layers. Choose now, and choose systems that learn in your environment.
